Where is self-driving car sensor technology headed?

According to James Consulting, today's self-driving cars rely on a variety of sensors to provide the spatial awareness needed for automated driving without driver intervention. Innovative radar technology, complementing redundant sensors, is pushing autonomous vehicle development into a revolutionary next phase and accelerating its arrival in our daily lives.

Self-driving car sensors: a long road ahead

Today's self-driving cars rely on a wide variety of sensors to provide the spatial awareness needed for automated driving without driver intervention. Current sensor solutions rely heavily on visible light sensors to provide three-dimensional (3D) detail of the surrounding environment. These sensors are subject to much the same limitations as human eyes: in low-visibility conditions (night, rain, snow, fog, dust, low light, etc.), their ability to sense distance is limited or their performance degrades.

According to the latest data, Americans drive 3.5 trillion miles a year, with one car crash every 90 million miles. By comparison, autonomous vehicles have so far covered only 12 million miles in real-world tests and require a manual “takeover” every 5,600 miles. Car sensors and artificial intelligence (AI) still have a long way to go.
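To put the figures above side by side, the short Python sketch below works through the arithmetic. The function and constant names are illustrative only, and the comparison is rough: a manual takeover is not a crash, so the ratio shows the scale of the gap rather than a like-for-like safety measure.

```python
# Figures quoted in the article
ANNUAL_MILES_US = 3.5e12      # miles driven in the US per year
MILES_PER_CRASH = 90e6        # one crash per 90 million miles
AV_TEST_MILES = 12e6          # miles covered by autonomous vehicles in tests
MILES_PER_TAKEOVER = 5_600    # one manual takeover per 5,600 miles


def events(total_miles, miles_per_event):
    """Number of events implied by a distance and a miles-per-event rate."""
    return total_miles / miles_per_event


def gap(miles_per_crash, miles_per_takeover):
    """How many times farther human drivers go between crashes than
    test vehicles go between takeovers (rough comparison only)."""
    return miles_per_crash / miles_per_takeover


if __name__ == "__main__":
    print(f"Implied crashes per year: {events(ANNUAL_MILES_US, MILES_PER_CRASH):,.0f}")
    print(f"Implied takeovers in testing: {events(AV_TEST_MILES, MILES_PER_TAKEOVER):,.0f}")
    print(f"Miles-between-events gap: {gap(MILES_PER_CRASH, MILES_PER_TAKEOVER):,.0f}x")
    print(f"Test miles as share of annual miles: {AV_TEST_MILES / ANNUAL_MILES_US:.6%}")
```

On these numbers, the distance between takeovers would have to grow by roughly four orders of magnitude to match the distance human drivers cover between crashes.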

Artificial intelligence (AI) cannot solve the problem on its own

Most vehicles on the market today still rely on the driver to control the vehicle, and the Advanced Driver Assistance Systems (ADAS) that provide automated safety protection sit at levels L1-L2 of the driving automation scale. The automotive industry is working toward higher levels of autonomous driving, and Tesla recently announced plans to introduce new autonomous driving features this year, including new navigation features and improvements to its autonomous driving software.

But can advances in artificial intelligence (AI) and machine learning fully meet the needs of fully automated vehicles?

AI and machine learning have become part of everyday life, but there are still many problems that AI cannot solve on its own. The spatial perception required for an L3-L5 self-driving car depends on the fidelity of the vehicle's sensor data. The higher the fidelity, the better the end result.

Future autonomous vehicles need better spatial perception and discrimination in all weather conditions. They also have to be able to distinguish objects that are close together in order to accurately map the x, y, and z positions of each object.
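As a rough illustration of what mapping x, y, and z positions involves, the Python sketch below assumes a sensor that reports each detection as a range, an azimuth angle and an elevation angle. That measurement format, the axis convention (x forward, y left, z up) and the function names are assumptions made for this example, not details from the article.

```python
import math


def to_xyz(range_m, azimuth_rad, elevation_rad):
    """Convert one detection to Cartesian coordinates.

    Assumed convention: x forward, y left, z up, angles in radians.
    """
    horizontal = range_m * math.cos(elevation_rad)
    x = horizontal * math.cos(azimuth_rad)
    y = horizontal * math.sin(azimuth_rad)
    z = range_m * math.sin(elevation_rad)
    return x, y, z


def separation(a, b):
    """Straight-line distance between two (x, y, z) points, in meters."""
    return math.dist(a, b)


if __name__ == "__main__":
    # Two hypothetical objects at the same 50 m range, one degree apart.
    left = to_xyz(50.0, math.radians(0.5), 0.0)
    right = to_xyz(50.0, math.radians(-0.5), 0.0)
    print(f"Separation at 50 m: {separation(left, right):.2f} m")
```

In this example the two objects are less than a meter apart, so a sensor whose angular resolution is coarser than about one degree would tend to merge them into a single detection at that range.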

The importance of sensors

As human beings, we rely mainly on vision, combined with hearing, touch, smell and balance, to act safely in daily life. Imagine how difficult it is to act when one or more of our senses are blocked or malfunctioning. Our brains must work harder to compensate for the risk of misjudgment caused by reduced fidelity.

A good example is when we cannot see. While moving, we can compensate for the loss of vision by using balance, touch, and hearing, but significant ambiguity remains and we are less certain about our surroundings, so we act much more slowly.

In short, the higher the fidelity of the data from a sensor or combination of sensors, the less work the brain (the artificial intelligence) has to do to solve the problem; and the lower the ambiguity, the higher the resulting confidence and safety.
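One simple way to see why combining sensors lowers ambiguity is inverse-variance weighting, sketched below in Python. This is a textbook technique chosen for illustration, not a method described in the article. It assumes each sensor gives an independent, unbiased estimate with a known variance, and the camera and radar figures are hypothetical.

```python
def fuse(estimates):
    """Fuse independent (value, variance) estimates by inverse-variance weighting.

    Returns the fused value and its variance. The fused variance is always
    smaller than the smallest input variance.
    """
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total


if __name__ == "__main__":
    camera = (20.0, 4.0)  # hypothetical distance in meters, variance in m^2
    radar = (21.0, 1.0)   # hypothetical, higher-fidelity measurement
    value, variance = fuse([camera, radar])
    print(f"Fused distance: {value:.2f} m, variance: {variance:.2f} m^2")
```

The fused estimate leans toward the higher-fidelity sensor, and its variance ends up lower than that of either sensor alone, which is the sense in which better or additional sensor data leaves less work for the AI.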

Current sensor limitations

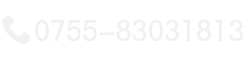

To date, autonomous vehicles have focused primarily on mimicking human capabilities, including replicating key human or animal senses, as shown in Figure 1:

• Vision – Stereo cameras and lidars (LiDAR) for high-resolution 3D space perception

• Sound – ultrasonic and radar sensors used to determine the position, velocity and direction of an object

• Balance – inertial and gyroscopic sensors for sensing motion

• Location – magnetometer and GPS for determining location and direction

When these sensors are integrated with AI, current autonomous vehicles perform with sufficient confidence in known environments when weather conditions are good, but that is not yet enough for us humans to place full confidence in these technologies.

Future sensors

So what progress does the automotive industry need to make to gain the confidence required for the highest levels of autonomous driving (L4 and L5)? Much of the current hype centers on the development of AI, which is expected to provide comparable or enhanced responses to everyday situations. To do this, the sensor data that AI needs goes far beyond simple copying of human senses.

To improve all-weather safety, future sensors need to operate well in all weather conditions, relying less on visible light sensors as the primary sensor and making use of other cutting-edge technologies such as millimeter-wave radar.

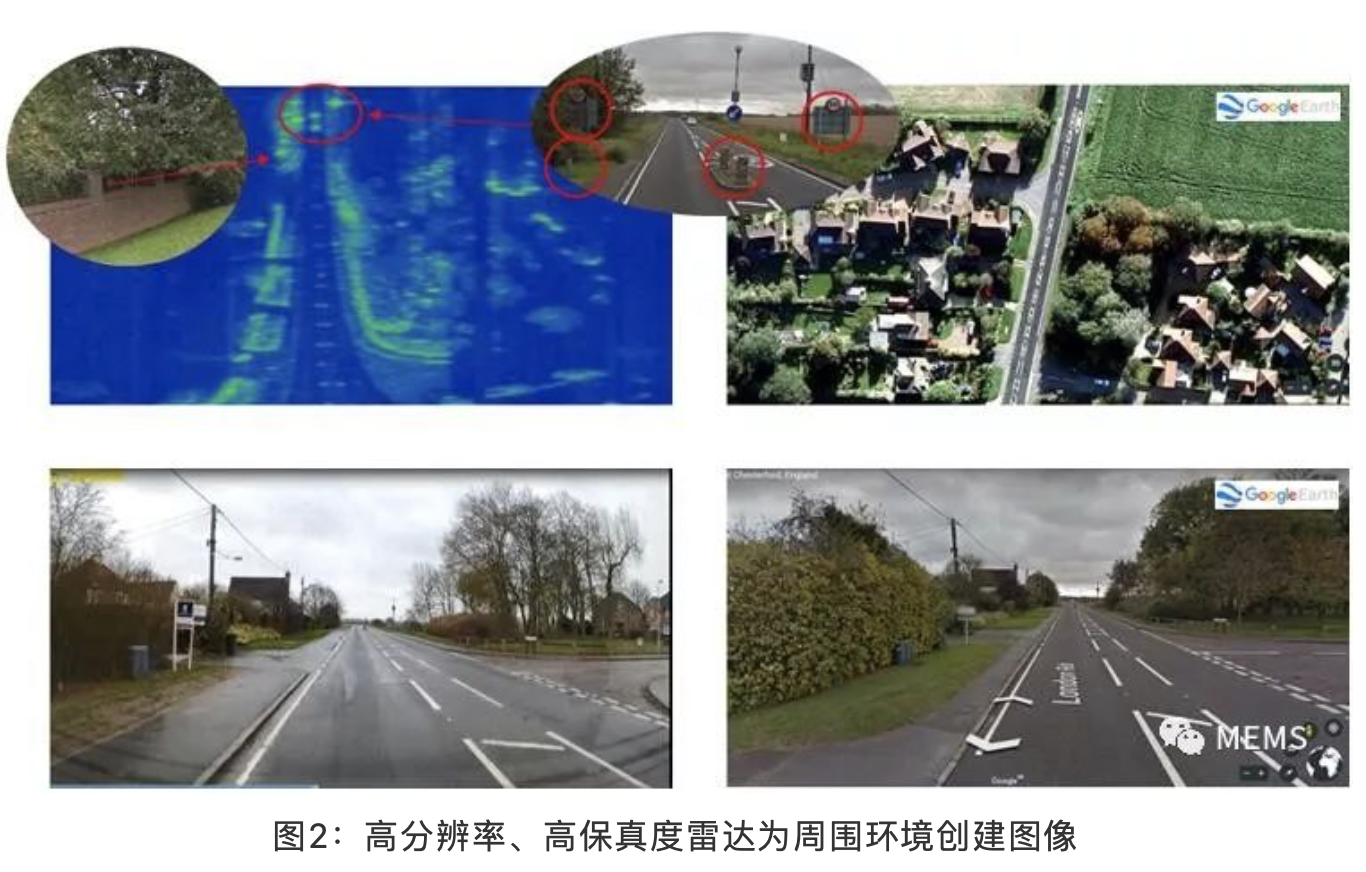

High-resolution, high-fidelity radars are being developed, and today's radars are already capable of creating detailed images of the surrounding environment in all weather conditions. Seeing is believing, as shown in Figure 2.

This exciting and innovative radar technology is the next revolutionary step, driving the development of autonomous vehicles and accelerating their arrival in our daily lives.

@Clem Robertson
